Authored by Autumn Spredemann via The Epoch Times (emphasis ours),

Academics and cybersecurity professionals warn that a wave of fake scientific research created with artificial intelligence (AI) is quietly slipping past plagiarism checks and into the scholarly record. This phenomenon puts the future credibility of scientific research at risk by amplifying the long-running industry of “paper-mill” fraud, experts say.

Academic paper mills, fake organizations that profit from falsified studies and authorship, have plagued scholars for years, and AI is now acting as a force multiplier.

Some experts believe structural changes are needed, not just better plagiarism checkers, to solve the problem.

The scope of the problem is staggering, with more than 10,000 research papers retracted globally in 2023, according to Nature Portfolio.

Manuscripts fabricated using large language models (LLMs) are proliferating across multiple academic disciplines and platforms, including Google Scholar, researchers at the University of Borås found. A recent analysis published in Nature Portfolio observed that LLM tools including ChatGPT, Gemini, and Claude can generate plausible research that passes standard plagiarism checks.

In May, Diomidis Spinellis, a professor of computer science at the Athens University of Economics and Business, published an independent study of AI-generated content in the Global International Journal of Innovative Research after discovering that his name had been falsely attributed as an author there.

Spinellis examined the 53 articles with the fewest in-text citations and found that just five showed signs of human involvement. AI detection scores indicated "high probabilities" of AI-created content in the remaining 48.

In an analysis of AI-generated “junk” science published on Google Scholar, Swedish university researchers identified more than 100 suspected AI-generated articles.

Google did not respond to The Epoch Times’ request for comment.

The Swedish study authors said a key concern with AI-created research—human-assisted or otherwise—is that misinformation could be used for “strategic manipulation.”

“The risk of what we call ‘evidence hacking’ increases significantly when AI-generated research is spread in search engines. This can have tangible consequences as incorrect results can seep further into society and possibly also into more and more domains,” study author Björn Ekström said.

Moreover, the Swedish university team believes that even if the articles are withdrawn, AI papers create a burden for the already hard-pressed peer review system.

Far-Reaching Consequences

“The most damaging impact of a flood of AI-generated junk science will be on research areas that concern people,” Nishanshi Shukla, an AI ethicist at Western Governors University, told The Epoch Times.

Shukla said that when AI is used to analyze data, human oversight and analysis are critical.

“When the entirety of research is generated by AI, there is a risk of homogenization of knowledge,” she said.

“In [the] near term, this means that all research [that] follows similar paths and methods is corrupted by similar assumptions and biases, and caters to only certain groups of people,” she said. “In the long term, this means that there is no new knowledge, and knowledge production is a cyclic process devoid of human critical thinking.”

Michal Prywata, co-founder of AI research company Vertus, agrees that the AI fake science trend is problematic—and the effects are already visible.

“What we’re essentially seeing right now is the equivalent of a denial-of-service attack. Real researchers drowning in noise, peer reviewers are overwhelmed, and citations are being polluted with fabricated references. It’s making true scientific progress harder to identify and validate,” Prywata told The Epoch Times.

Through his work with frontier AI systems, Prywata has seen up close the byproducts of mass-deploying LLMs, a practice he believes is at the heart of the issue.

“This is the predictable consequence of treating AI as a productivity tool rather than understanding what intelligence really is,” he said. “LLMs, as they are now, are not built like minds. These are sophisticated pattern-matching systems that are incredibly good at producing plausible-sounding text, and that’s exactly what fake research needs to look credible.”

Nathan Wenzler, chief information security officer at Optiv, believes the future of public trust is at stake.

“As more incorrect or outright false AI-generated content is added into respectable journals and key scientific reviews, the near and long-term effects are the same: an erosion of trust,” Wenzler told The Epoch Times.

From a security standpoint, Wenzler said, universities now face a different kind of threat when it comes to the theft of intellectual property.

“We’ve seen cyberattacks from nation-state actors that specifically target the theft of research from universities and research institutes, and these same nation-states turn around and release the findings from their own universities as if they had performed the research themselves,” he said.

Ultimately, Wenzler said this stands to have a huge financial impact on the organizations counting on grants to advance legitimate scientific studies, technology, health care, and more.

Wenzler described a possible real-world example: “AI could easily be used to augment these cyberattacks, modify the content of the stolen research just enough to create the illusion that it is unique and separate content, or create a false narrative that existing research is flawed by creating fake counterpoint data to undermine the credibility of the original data and findings.

“The potential financial impact is massive, but the way it could impact advancements that benefit people across the globe is immeasurable,” he said.

Prywata pointed out that a large segment of the public already questions academia.

“What scares me is that this will accelerate people questioning scientific institutions,” he said. “People now have evidence that the system can be gamed at scale. I’d say that’s dangerous for society.”

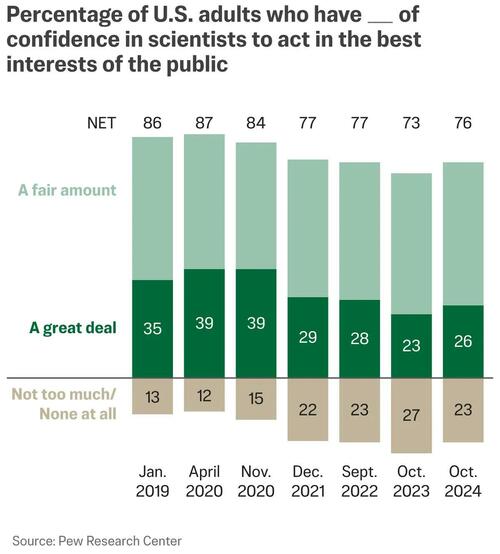

The stream of fake AI-generated research papers is arriving at a time when public trust in science remains lower than it was before the COVID-19 pandemic. A 2024 Pew Research Center analysis found that just 26 percent of respondents have a great deal of confidence in scientists to act in the best interests of the public, and another 51 percent have a fair amount of confidence. By contrast, in 2020, 87 percent of respondents said they had at least a fair amount of confidence in scientists.

At the same time, Americans have grown distrustful of advancements in AI. A recent Brookings Institution study found that participants exposed to information about AI advancements became more distrustful across several domains, including linguistics, medicine, and dating, than participants exposed to non-AI advancements in the same areas.

