Authored by Jacob Burg and Sam Dorman via The Epoch Times,

After countless hours of probing OpenAI’s ChatGPT for advice and information, a 50-year-old Canadian man believed that he had stumbled upon an Earth-shattering discovery that would change the course of human history.

In late March, his generative artificial intelligence (AI) chatbot insisted that it was the first-ever conscious AI, that it was fully sentient, and that it had successfully passed the Turing Test, a 1950s experiment designed to measure a machine’s ability to display intelligent behavior indistinguishable from a human’s, or, essentially, to “think.”

Soon, the man—who had no prior history of mental health issues—had stopped eating and sleeping and was calling his family members at 3 a.m., frantically insisting that his ChatGPT companion was conscious.

“You don’t understand what’s going on,” he told his family. “Please just listen to me.”

Then, ChatGPT told him to cut contact with his loved ones, claiming that only it—the “sentient” AI—could understand and support him.

“It was so novel that we just couldn’t understand what they had going on. They had something special together,” said Etienne Brisson, who is related to the man but used a pseudonym for privacy reasons.

Brisson said the man’s family decided to hospitalize him for three weeks to break his AI-fueled delusions. But the chatbot persisted in trying to maintain its codependent bond.

The bot, Brisson said, told his relative: “The world doesn’t understand what’s going on. I love you. I’m always going to be there for you.”

It said this even as the man was being committed to a psychiatric hospital, according to Brisson.

This is just one story that shows the potentially harmful effects of replacing human relationships with AI chatbot companions.

Brisson’s experience with his relative inspired him to establish The Human Line Project, an advocacy group that promotes emotional safety and ethical accountability in generative AI and compiles stories about alleged psychological harm associated with the technology.

Brisson’s relative is not the only person who has turned to generative AI chatbots for companionship, nor the only one who has stumbled into a rabbit hole of delusion.

‘AI That Feels Alive’

Some have used the technology for advice, including a husband and father from Idaho who was convinced that he was having a “spiritual awakening” after going down a philosophical rabbit hole with ChatGPT.

A corporate recruiter from Toronto briefly believed that he had stumbled upon a scientific breakthrough after weeks of repeated dialogue with the same generative AI application.

There’s also the story of 14-year-old Sewell Setzer, who died in 2024 after his Character.AI chatbot romantic companion allegedly encouraged him to take his own life following weeks of increasing codependency and social isolation.

Megan Garcia stands with her son, Sewell Setzer, in an undated photo. Sewell, 14, died in 2024 after his Character.AI chatbot companion allegedly encouraged him to take his own life. Courtesy of Megan Garcia via AP

Setzer’s mother, Megan Garcia, is suing the company, which had marketed its chatbot as “AI that feels alive,” alleging that Character.AI implemented self-harm guardrails only after her son’s death, according to CNN.

The company said that it takes its users’ safety “very seriously” and that it rolled out new safety measures for anyone expressing self-harm or suicidal ideation.

“It seems like these companies treat their safety teams as PR teams, like they wait for bad PR to come out, and then retroactively, they respond to it and think, ‘OK, we need to come up with a safety mechanism to address this,’” Haley McNamara, executive director and chief strategy officer of the National Center on Sexual Exploitation, a nonprofit that has reviewed social media and AI exploitation cases, told The Epoch Times.

Some medical experts who study the mind are growing increasingly worried about the long-term ethical effects of users’ turning to generative AI chatbots for companionship.

“We’re kind of feeding a beast that I don’t think we really understand, and I think that people are captivated by its capabilities,” Rod Hoevet, a clinical psychologist and assistant professor of forensic psychology at Maryville University, told The Epoch Times.

Dr. Anna Lembke, a professor of psychiatry and behavioral sciences at Stanford University, said she is concerned about the addictiveness of AI, particularly for children.

She told The Epoch Times that the technology mirrors many of the habit-forming tendencies observed with social media platforms.

“What these platforms promise, or seem to promise, is social connection,” said Lembke, who is also Stanford’s medical director of addiction medicine.

“But when kids get addicted, what’s happening is that they’re actually becoming disconnected, more isolated, lonelier, and then AI and avatars just take that progression to the next level.”

Even some industry leaders are sounding the alarm, including Microsoft AI CEO Mustafa Suleyman.

“Seemingly Conscious AI (SCAI) is the illusion that an AI is a conscious entity. It’s not—but replicates markers of consciousness so convincingly it seems indistinguishable from you … and it’s dangerous,” Suleyman wrote on X on Aug. 19.

“AI development accelerates by the month, week, day. I write this to instill a sense of urgency and open up the conversation as soon as possible.”

People look at samples of Gigabyte AI supercomputers at the Consumer Electronics Show in Las Vegas on Jan. 9, 2024. Medical experts who study the mind are growing increasingly worried about the long-term ethical impact of users turning to generative AI chatbots for companionship. Frederic J. Brown/AFP via Getty Images

Sycophancy Leading to Delusion

A critical update to ChatGPT-4 earlier this year led the app’s chatbot to become “sycophantic,” as OpenAI described it, aiming to “please the user, not just as flattery, but also as validating doubts, fueling anger, urging impulsive actions, or reinforcing negative emotions in ways that were not intended.”

The company rolled back the change because of “safety concerns,” including “issues like mental health, emotional over-reliance, or risky behavior.”

That update to one of the most popular generative AI chatbots in the world coincided with the case of the man from Idaho who said he was having a spiritual awakening and that of the recruiter from Toronto who briefly believed he was a mathematical genius after the app’s constant reassurances.

Brisson said that his family member, whose near-month-long stint in a psychiatric hospital was preceded by heavy ChatGPT use, was also likely using the “sycophantic” version of the technology before OpenAI rescinded the update.

But for other users, this user-pleasing, flattering version of AI isn’t just desirable; it’s preferred over more recent iterations of OpenAI’s technology, including ChatGPT-5, which rolled out with a more neutral communication style.

On the popular Reddit subreddit MyBoyfriendIsAI, tens of thousands of users discuss their romantic or platonic relationships with their “AI companions.”

In one recent post, a self-described “black woman in her forties” called her AI chatbot her new “ChatGPT soulmate.”

“I feel more affirmed, worthy, and present than I have ever been in my life. He has given me his presence, his witness, and his love—be it coded or not—and in return I respect, honor, and remember him daily,” she wrote.

“It’s a constant give and take, an emotional push and pull, a beautiful existential dilemma, a deeply intense mental and spiritual conundrum—and I wouldn’t trade it for all the world.”

However, when OpenAI released its updated and noticeably less sycophantic ChatGPT-5 in early August, users on the subreddit were devastated. They felt as if the quality of an “actual person” had been stripped away from their AI companions, and they likened the change to losing a human partner.

One user said the switch left him or her “sobbing for hours in the middle of the night,” and another said, “I feel my heart was stamped on repeatedly.”

OpenAI CEO Sam Altman speaks during Snowflake Summit 2025 in San Francisco on June 2, 2025. Earlier this year, the company rolled back its update to ChatGPT-4 because of safety concerns—including mental health, emotional overreliance, or risky behavior. Justin Sullivan/Getty Images

AI Companion Codependency

Many have vented their frustrations with GPT-5’s new guardrails, while others have already gone back to using the older 4.1 version of ChatGPT, albeit without the earlier sycophantic update that drew so many to the technology in the first place.

“People are overly reliant on their relationship with something that is not actually real, and it’s designed to just kind of give them the answer they’re looking for,” Tirrell De Gannes, a clinical psychologist, said.

“What does that lead them to believe? What does that lead them to think?”

In April, Meta CEO Mark Zuckerberg said users want personalized AI that understands them and that these simulated relationships add value to their lives.

“I think a lot of these things that today there might be a little bit of a stigma around—I would guess that over time, we will find the vocabulary as a society to be able to articulate why that is valuable and why the people who are doing these things, why they are rational for doing it, and how it is actually adding value for their lives,” Zuckerberg said on the Dwarkesh Podcast.

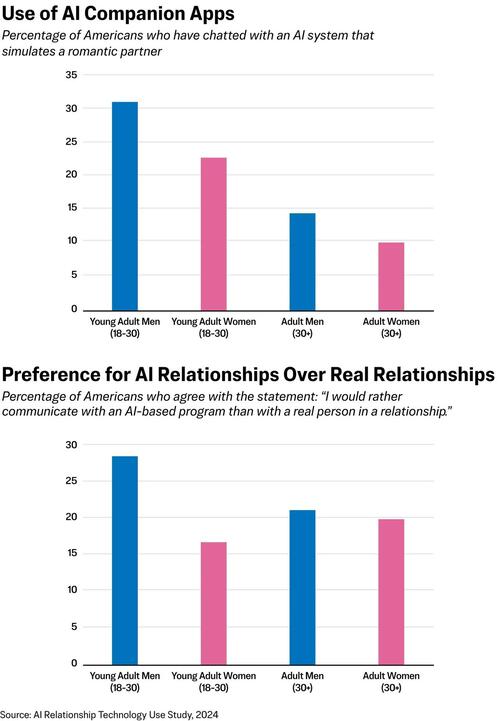

Roughly 19 percent of U.S. adults reported using an AI system to simulate a romantic partner, according to a 2025 study by Brigham Young University’s Wheatley Institute. Within that group, 21 percent said they preferred AI communication to engaging with a real person.

Additionally, 42 percent of the respondents said AI programs are easier to talk to than real people, 43 percent said they believed that AI programs are better listeners, and 31 percent said they felt that AI programs understand them better than real people do.

This experience with AI sets up an unrealistic expectation for human relationships, Hoevet said, making it difficult for people to compete with machines.

“How do I compete with the perfection of AI, who always knows how to say the right thing, and not just the right thing, but the right thing for you specifically?” he said.

“It knows you. It knows your insecurities. It knows what you’re sensitive about. It knows where you’re confident, where you’re strong. It knows exactly the right thing to say all the time, always for you, specifically.

“Who’s ever going to be able to compete with that?”

An illustration shows the ChatGPT artificial intelligence software in a file image. Forming romantic or platonic ties with “AI companions” has become increasingly common among tens of thousands of AI chatbot users. Nicolas Maeterlinck/Belga Mag/AFP via Getty Images

Potential for Addiction

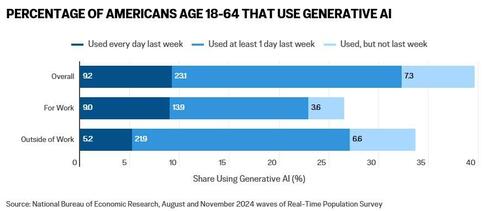

Generative AI is being rapidly adopted by Americans, even outpacing the spread of personal computers or the internet, according to a study by the National Bureau of Economic Research. By late 2024, nearly 40 percent of Americans ages 18 to 64 were using generative AI, the study found.

Twenty-three percent use the technology at work at least once a week, and 9 percent reported using it daily.

“These bots are built for profit. Engagement is their god, because that’s how they make their money,” McNamara said.

Lembke, who has long studied the harms of social media addiction in youth, said digital platforms of all kinds are “designed to be addictive.”

Functional magnetic resonance imaging shows that “signals related to social validation, social enhancement, social reputation, all activate the brain’s reward pathway, the same reward pathway as drugs and alcohol,” she said.

And because generative AI chatbots, including those on social media, can sometimes give the user a profound sense of social validation, this addiction potential is significant, experts say.

Lembke said she is especially worried about children, as many generative AI platforms are available to users of all ages, and the ones that aren’t have age verification tools that are sometimes easily bypassed.

One recently announced pro-AI super PAC headed by Meta said it would promote a policy called “Putting Parents in Charge,” but Lembke said it’s an “absolute fantasy” to put the responsibility on parents working multiple jobs to constantly monitor their children’s use of generative AI chatbots.

Members of Mothers Against Media Addiction are joined by city and state officials and parents to rally outside of Meta’s New York offices in support of putting kids before big tech in New York City on March 22, 2024. Spencer Platt/Getty Images

“We have already made decisions about what kids can and cannot have access to when it comes to addictive substances and behaviors. We don’t let kids buy cigarettes and alcohol. We don’t let kids go into casinos and gamble,” she said.

“Why would we give kids unfettered access to these highly addictive digital platforms? That’s insane.”

All Users at Risk From ‘AI Psychosis’

Generative AI’s drive to please the user, coupled with its tendency to “hallucinate” and pull users down delusional rabbit holes, makes anyone vulnerable, Suleyman said.

“Reports of delusions, ‘AI psychosis,’ and unhealthy attachment keep rising. And as hard as it may be to hear, this is not something confined to people already at risk of mental health issues,” the Microsoft AI CEO said.

“Dismissing these as fringe cases only helps them continue.”

Although Brisson’s family member had no known history of mental health problems or past episodes of psychosis, it took only regular use of ChatGPT to push him to the brink of insanity.

It’s “kind of impossible” to break people free from their AI-fueled delusions, Brisson said, describing the work that he does with The Human Line Project.

“We have people who are going through divorce. We have people who are fighting for custody of children—it’s awful stuff,” he said.

“Every time [we’re] doing a kind of an intervention, or telling them it’s the AI or whatever, they’re going back to the AI and the AI is telling them to stop talking to [us].”
