Authored by William Janeway via Project Syndicate,

The rise of generative AI has triggered a global race to build semiconductor plants and data centers to feed the vast energy demands of large language models. But as investment surges and valuations soar, a growing body of evidence suggests that financial speculation is outpacing productivity gains.

In recent weeks, the notion that we are witnessing an “AI Bubble” has moved from the fringes of public debate to the mainstream. As Financial Times commentator Katie Martin aptly put it, “Bubble-talk is breaking out everywhere.”

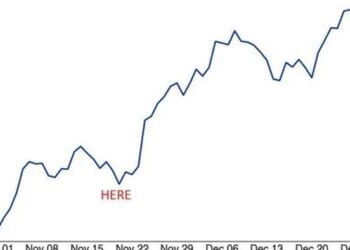

The debate is fueled by a surge of investment in data centers and in the vast energy infrastructure required to train and operate the large language models (LLMs) that drive generative AI. As with previous speculative bubbles, rising investment volumes fuel soaring valuations, with both reaching historic highs across public and private markets. The so-called “Magnificent Seven” tech giants – Alphabet, Amazon, Apple, Meta, Microsoft, Nvidia, and Tesla – dominate the S&P 500, with each boasting a market capitalization above $1 trillion, and Nvidia is now the world’s first $5 trillion company.

In the private market, OpenAI reportedly plans to raise $30 billion at a $500 billion valuation from SoftBank, the most exuberant investor of the post-2008 era. Notably, this fundraising round comes even though the company lost $5 billion in 2024 on $3.7 billion in revenue, and its cash burn is expected to total $115 billion through 2029.

Much like previous speculative cycles, this one is marked by the emergence of creative financing mechanisms. Four centuries ago, the Dutch Tulip Mania gave rise to futures contracts on flower bulbs. The 2008 global financial crisis was fueled by exotic derivatives such as synthetic collateralized debt obligations and credit default swaps. Today, a similar dynamic is playing out in the circular financing loop that links chipmakers (Nvidia, AMD), cloud providers (Microsoft, CoreWeave, Oracle), and LLM developers like OpenAI.

While the contours of an AI bubble are hard to miss, its actual impact will depend on whether it spills over from financial markets into the broader economy. How – and whether – that shift will occur remains unclear. Virtually every day brings announcements of new multibillion-dollar AI infrastructure projects. At the same time, a growing number of reports find that AI’s business applications are delivering disappointing returns, suggesting that the hype may be running well ahead of reality.

The Ghosts of Bubbles Past

Financial bubbles can be understood in terms of their focus and locus. The first concerns what investors are betting on: Do the assets that attract speculation have the potential to boost economic productivity when deployed at scale? The second concerns where the speculation takes place: Is it concentrated primarily in equity or in credit markets? The distinction matters, because it is debt-financed speculation that leads to economic disaster when a bubble inevitably bursts. As Moritz Schularick and Alan M. Taylor have shown, leverage-fueled bubbles have repeatedly triggered financial crises over the past century and a half.

The credit bubble of 2004-07, which focused on real estate and culminated in the global financial crisis of 2008-09, is a case in point. It offered no promise of increased productivity, and when it burst, the economic consequences were horrendous, prompting unprecedented public underwriting of private losses, principally by the US Federal Reserve.

By contrast, the focus of the tech bubble of the late 1990s was on building the internet’s physical and logical infrastructure on a global scale, accompanied by the first wave of experiments in commercial applications. Speculation during this period was mainly concentrated in public equity markets, with some spillover into the market for tradable junk bonds, and overall leverage remained limited. When the bubble burst, the resulting economic damage was relatively modest and was easily contained through conventional monetary policy.

The history of modern capitalism has been defined by a succession of such “productive bubbles.” From railroads to electrification to the internet, waves of financial speculation have repeatedly mobilized vast quantities of capital to fund potentially transformational technologies whose returns could not be known in advance.

In each of these cases, the companies that built the foundational infrastructure went bust. Speculative funding had enabled them to build years before trial-and-error experimentation yielded economically productive applications. Yet no one tore up the railroad tracks, dismantled the electricity grids, or dug up the underground fiber-optic cables. The infrastructure remained, ready to support the creation of the imagined “new economy,” albeit only after a painful delay and largely with new players at the helm. The experimentation needed to discover the “killer applications” enabled by these “General Purpose Technologies” takes time. Those seeking instant gratification from LLMs are likely to be disappointed.

For example, while construction of the first railroad in the United States began in 1828, mail-order retail, the killer app in this instance, began only with the founding of Montgomery Ward in 1872. Ten years later, Thomas Edison introduced the Age of Electricity by turning on the Pearl Street power station, but the productivity revolution in manufacturing caused by electrification came only in the 1930s. Similarly, it took a generation to get from the Otto internal combustion engine, invented in 1876, to Henry Ford’s Model T in 1908, and from Jack Kilby’s integrated circuit (1958) to the IBM PC (1981). The first demonstration of the proto-internet came in 1972; Amazon and Google were founded in 1994 and 1998, respectively.

Where does the AI bubble fit on this spectrum? While much of the investment so far has come from Big Tech’s vast cash reserves and continued cash flow, signs of leverage are beginning to emerge. For example, Oracle, a late entrant to the race, is compensating for its relatively limited liquidity with a debt package of about $38 billion.

And that may be only the beginning. OpenAI has announced plans to invest at least $1 trillion over the next five years. Since spending on this scale will inevitably require large-scale borrowing, and debt must be serviced whether or not the technology pays off, LLMs have a narrow window to prove their economic value and justify such extraordinary levels of investment.

Early studies offered reason for optimism. Research by Stanford’s Erik Brynjolfsson and MIT’s Danielle Li and Lindsey Raymond, examining the introduction of generative AI in customer-service centers, found that AI assistance increased worker productivity by 15%. The biggest gains were among less experienced employees, whose productivity rose by more than 30%.

Brynjolfsson and his co-authors also observed that employees who followed AI recommendations became more efficient over time, and that exposure to AI tools led to lasting skill improvements. Moreover, customers treated AI-assisted agents more positively, showing higher satisfaction and making fewer requests to speak with a supervisor.

The broader picture, however, appears less encouraging. A recent survey by MIT’s Project NANDA found that 95% of private-sector generative AI pilot projects are failing. Although less rigorous than Brynjolfsson’s peer-reviewed study, the survey suggests that most corporate experiments with generative AI have fallen short of expectations. The researchers attributed these failures to a “learning gap” between the few firms that obtained expert help in tailoring applications to practical business needs – chiefly back-office administrative tasks – and those that tried to develop in-house systems for outward-facing functions such as sales and marketing.

The Limits of Generative AI

The main challenge facing generative AI users stems from the nature of the technology itself. By design, GenAI systems transform their training data – text, images, and speech – into numerical vectors which, in turn, are analyzed to predict the next token: a word fragment, a pixel, or a sound. Since they are essentially probabilistic prediction engines, they inevitably make random errors.
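Why a probabilistic prediction engine will sometimes be wrong can be shown with a toy sketch. Everything in it is hypothetical: the prompt, the three candidate tokens, and their probabilities are invented for illustration, and a real LLM scores a vocabulary of many thousands of tokens with a neural network rather than a hand-written table. The sketch also assumes the system samples its answer from the distribution rather than always picking the most likely token. Under those assumptions, it emits the wrong answers at roughly the rate it assigns them, even when it "knows" the right one.

```python
import random

# Hypothetical next-token distribution for the prompt "The capital of France is".
# These probabilities are invented for illustration, not taken from any real model.
NEXT_TOKEN_PROBS = {"Paris": 0.90, "Lyon": 0.06, "Berlin": 0.04}


def sample_next_token(probs, rng):
    """Draw one token with probability proportional to its assigned weight."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def error_rate(probs, correct, trials, seed=0):
    """Fraction of sampled tokens that differ from the correct answer."""
    rng = random.Random(seed)  # seeded so the experiment is repeatable
    wrong = sum(sample_next_token(probs, rng) != correct for _ in range(trials))
    return wrong / trials


if __name__ == "__main__":
    rate = error_rate(NEXT_TOKEN_PROBS, "Paris", trials=10_000)
    print(f"share of wrong answers: {rate:.3f}")  # close to 0.10
```

Nothing in the sampling step checks the answer against the world; the 10% of wrong draws come out with the same fluency as the right ones, which is the gap Smith describes below.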

Earlier this year, the late Brian Cantwell Smith, former chief scientist at Xerox’s legendary Palo Alto Research Center, succinctly described the problem. As quoted to me by University of Edinburgh Professor Henry Thompson, Smith observed: “It’s not good that [ChatGPT] says things that are wrong, but what is really, irremediably bad is that it has no idea that there is a world about which it is mistaken.”

The inevitable result is errors of different sorts, the most damaging of which are “hallucinations” – statements that sound plausible but describe things that don’t actually exist. This is where context becomes critical: in business settings, tolerance for error is already low and approaches zero when the stakes are high.

Code generation is a prime example. Software used in financially or operationally sensitive environments must be rigorously tested, edited, and debugged. A junior programmer equipped with generative AI can produce code with remarkable speed. But that output still requires careful review by senior engineers. As numerous anecdotes circulating online suggest, any productivity gained at the front end can disappear once the resources needed for testing and oversight are taken into account. The Bulwark’s Jonathan Last put it well:

“AI is like Chinese machine production. It can create good outputs at an incredibly cheap price (measuring here in the cost of human time). Which means that AI – as it exists today – is a useful tool, but only for tasks that have a high tolerance for errors … if I asked ChatGPT to research a topic for me and I incorporated that research into a piece I was writing and it was only 90 percent correct, then we have a problem. Because my written product has a low tolerance for errors.”

In her new book The Measure of Progress, University of Cambridge economist Diane Coyle highlights another major concern: AI’s opacity. “When it comes to AI,” she recently wrote, “some of the most basic facts are missing or incomplete. For example, how many companies are using generative AI, and in which sectors? What are they using it for? How are AI tools being applied in areas such as marketing, logistics, or customer service? Which firms are deploying AI agents, and who is actually using them?”

The Inevitable Reckoning

This brings us to the central question: What is the value-creating potential of LLMs? Their insatiable appetite for computing power and electricity, together with their dependence on costly oversight and error correction, makes profitability uncertain. Will business customers generate enough profitable revenue to justify the required investment in infrastructure and human support? And if several LLMs perform at roughly the same level, will their outputs become commodified, reducing token production to a low-margin business?

From railroads to electrification to digital platforms, massive upfront investment has always been required to deliver the first unit of service, while the marginal cost of each additional unit has declined rapidly, often falling below the average cost needed to recover the initial investment. Under competitive conditions, prices tend to gravitate toward marginal cost, leaving all competitors operating at a loss. The result, time and again, has been regulated monopolies, cartels, or other “conspiracies in restraint of trade,” to borrow the language of the Sherman Antitrust Act.
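The trap can be made concrete with a stylized cost function, an illustrative assumption rather than anything drawn from the industry's actual accounts. Let F be the fixed upfront investment, c a constant marginal cost (held constant for simplicity), q the number of units served, and p the price. Average cost then always exceeds marginal cost, and if competition drives p down to c, profit collapses to the negative of the fixed investment:

```latex
AC(q) = \frac{F}{q} + c > c \quad \text{for all } q > 0,
\qquad
\pi = (p - c)\,q - F \;\xrightarrow{\;p \,\to\, c\;}\; -F .
```

However large the volume q, a price pinned to marginal cost recovers none of F, which is why the arrangements that have survived are those that hold price above c.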

There are two distinct alternatives to enterprise-level LLM deployment. One lies in developing small language models – systems trained on carefully curated datasets for specific, well-defined tasks. Large institutions, such as JPMorgan or government agencies, could build their own vertical applications, tailored to their needs, thereby reducing the risk of hallucinations and lowering oversight costs.

The other alternative is the consumer market, where AI providers compete for attention and advertising revenue with the established social-media platforms. In this domain, where value is often measured in entertainment and engagement, anything goes. ChatGPT reportedly has 800 million “weekly active users” – twice as many as it had in February. OpenAI appears poised to follow up with an LLM-augmented web browser, ChatGPT Atlas.

But given that Google’s and Apple’s browsers are free and already integrate AI assistants, it is unclear whether OpenAI can sustain a viable subscription or pay-per-token revenue model that justifies its massive investments. Various estimates suggest that only about 11 million users – less than 1.5% of the total – currently pay for ChatGPT in any form. So, consumer-focused LLMs may be condemned to bid for advertising revenue in an already mature market.

The outcome of this ongoing horse race is impossible to call. Will LLMs eventually generate positive cash flow and cover the energy costs of operating them at scale? Or will the still-nascent AI industry fragment into a patchwork of specialized, niche providers while the largest companies compete with established social-media platforms, including those owned by their corporate investors? As and when markets recognize that the industry is splintering rather than consolidating, the AI bubble will be over.

Ironically, an earlier reckoning might benefit the broader ecosystem, though it would be painful for those who bought in at the peak. Such a deflation could prevent many of today’s ambitious data-center projects from becoming stranded assets, akin to the unused railroad tracks and dark fiber left behind by past bubbles. In financial terms, it would also preempt a wave of high-risk borrowing that might end in yet another leveraged bubble and crash.

Most likely, a truly productive bubble will emerge only years after today’s speculative frenzy has cooled. As the Gartner Hype Cycle makes clear, a “trough of disillusionment” precedes the “plateau of productivity.” Timing may not be everything in life, but for investment returns it pretty well is.
