Today’s big story in the world of AI comes from the WSJ, which reports that OpenAI boss Sam Altman “told employees Monday that the company was declaring a ‘code red’ effort to improve the quality of ChatGPT and delaying other products as a result.”

The report notes that according to Altman “OpenAI had more work to do on the day-to-day experience of its chatbot, including improving personalization features for users, increasing its speed and reliability, and allowing it to answer a wider range of questions.”

He told employees the $500bn start-up was planning to delay other products as “we are at a critical time for ChatGPT”.

As a result, OpenAI would be “pushing back work on other initiatives, such as advertising, AI agents for health and shopping, and a personal assistant called Pulse.”

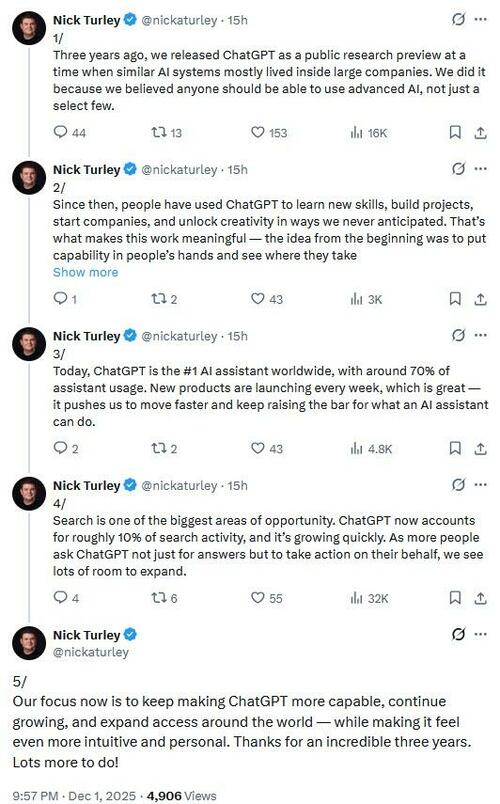

OpenAI’s head of ChatGPT, Nick Turley, said on X that “search is one of the biggest areas of opportunity. ChatGPT now accounts for roughly 10% of search activity, and it’s growing quickly.” He added that “our focus now is to keep making ChatGPT more capable, continue growing, and expand access around the world — while making it feel even more intuitive and personal.”

Altman’s companywide memo is the clearest indication yet of the pressure OpenAI is facing from competitors that have narrowed the startup’s lead in the AI race, especially Google, which released a new version of its Gemini AI model last month that surpassed OpenAI on industry benchmark tests and sent the search giant’s stock soaring.

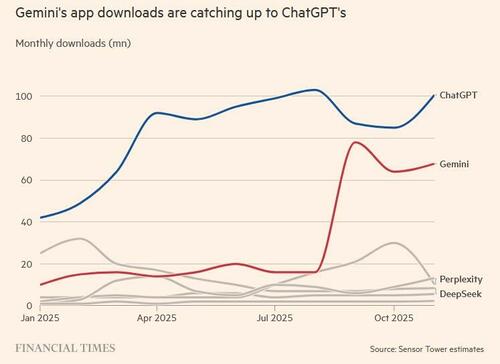

Gemini’s user base has been climbing since the August release of an image generator, Nano Banana, and Google said monthly active users grew from 450 million in July to 650 million in October. OpenAI is also facing pressure from Anthropic, which is becoming popular among business customers.

As the chart below shows, Gemini is rapidly closing the gap on monthly downloads (ChatGPT's 100.8M vs. Gemini's 67.8M in November)…

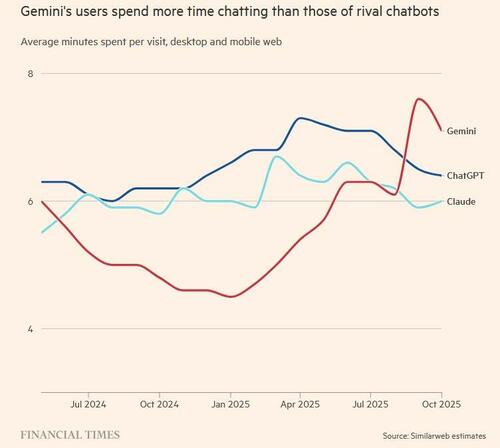

… and users now spend more time chatting in Gemini than rival chatbots such as ChatGPT or Claude.

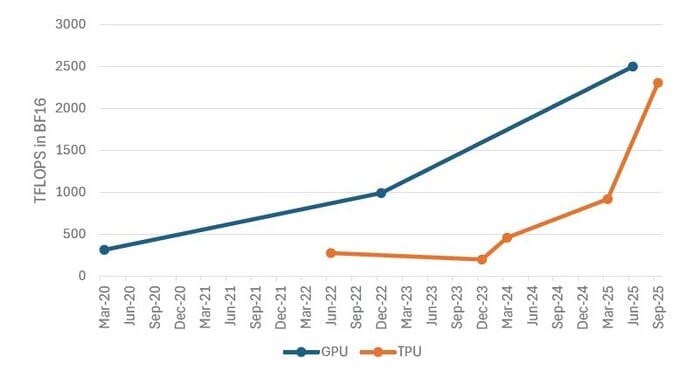

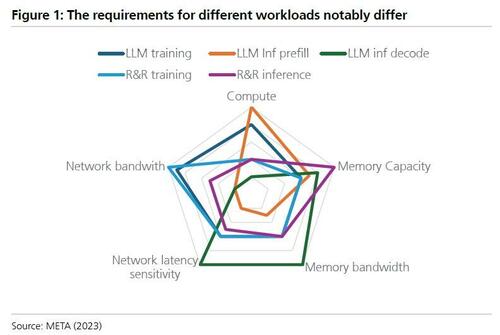

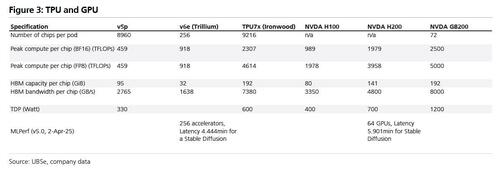

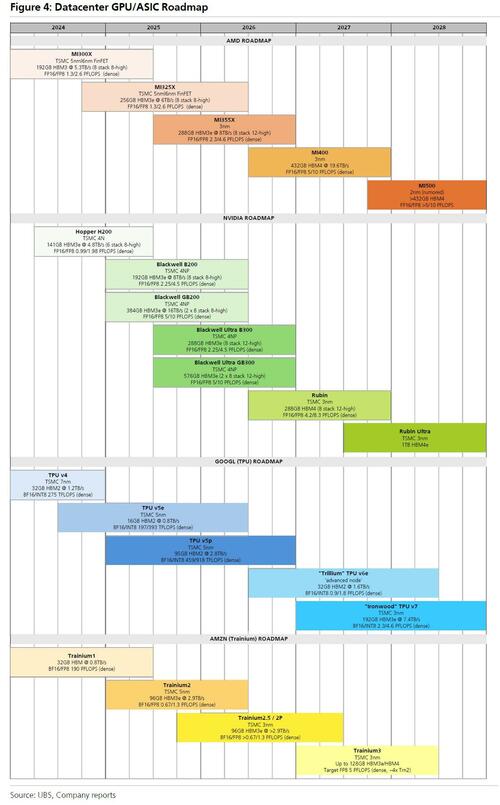

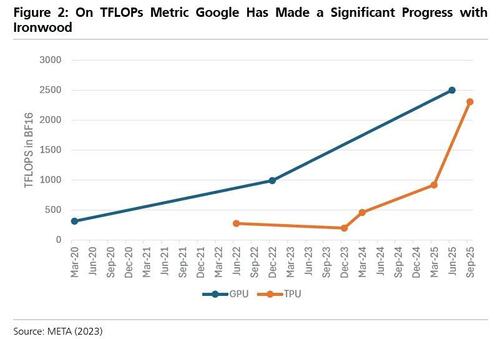

As we reported previously in “The Google TPU: The Chip Made For The AI Inference Era”, Google announced its latest Ironwood chip in April 2025 and made it generally available in November 2025. According to Google, the chip is optimized for LLMs, Mixture-of-Experts (MoE) models, and advanced reasoning, and it supports training, fine-tuning and inference workloads. This contrasts with prior TPU generations, which were more narrowly specialized.

In a note (available to pro subs), UBS tech analyst Tim Arcuri explains that Trillium (Gen 6) was optimized for inference workloads and carried notably less HBM than v5p (32GB vs. 95GB).

Arcuri notes that Ironwood has not been submitted to MLCommons’ MLPerf v5.1 datacenter training benchmark (the last round was November 12, 2025), but given its greater compute resources, FP8 support, and far larger HBM capacity, he expects its per-chip performance to be notably above Trillium's. Ironwood also advances TPU scale-up, with domains of up to 9,216 TPUs (vs. 8,960 for v5p and just 256 for Trillium).

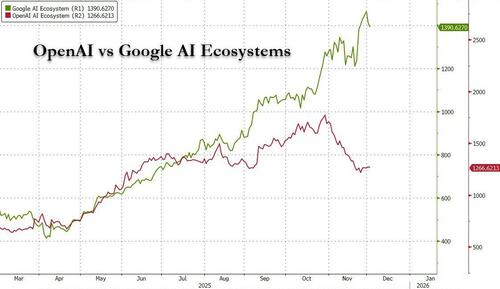

And here is why the entire NVDA ecosystem has been dramatically underperforming that of GOOGL…

… which is enjoying the spike in interest in its TPU product.

The launch of Gemini 3 pushed Google’s AI app higher in the US and UK iPhone app store rankings. Still, ChatGPT has held on as the top AI app, according to Sensor Tower, which tracks mobile usage.

Koray Kavukcuoglu, Google’s AI architect and DeepMind’s chief technology officer, said that the Big Tech group had “pushed our performance quite significantly” by training its AI models on Google’s own bespoke chips, the FT reported. The company also said it was integrating its latest AI models into products immediately, and that it had improved the methods used to train its models, an area where OpenAI has struggled recently.

Here UBS chimes in: while the bank expects Google to be open to broadening the TPU ecosystem over time, any effort to do so must limit potential cannibalization of GCP revenues. From that standpoint, META and AAPL are both prime candidates for internal TPU capacity, as they have large AI efforts supporting internal workloads, vast internal AI fleets, and relatively little reliance on GCP (limiting the risk to GCP AI cloud revenues). Furthermore, TPU is optimized for the JAX framework but also supports PyTorch through the PyTorch/XLA package, which would make integrating META's stack relatively easy. Still, any effort to stand up a TPU deployment would have to be weighed against the resources already allocated to META's internal ASIC and AMD ramps.

Before the launch of Gemini 3, Altman had said that OpenAI would “need to stay focused through short-term competitive pressure . . . expect the vibes out there to be rough for a bit”.

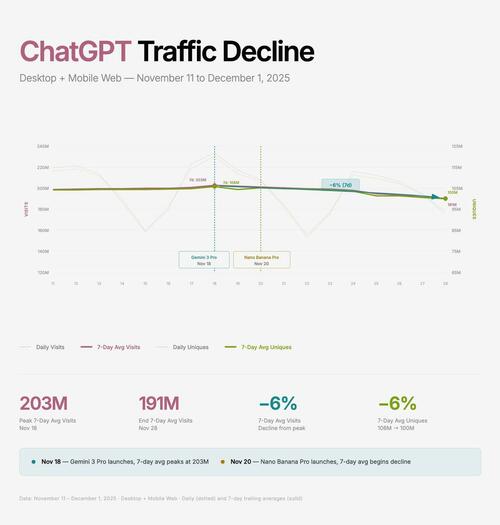

And here is another reason the vibes will get rougher: as Deedy Das shows, in the two weeks since the Gemini launch, ChatGPT's unique daily active users (7-day average) are down 6%. Expect this trend to continue.

Bottom line: with more than 800 million weekly users, OpenAI (with its $1.4 trillion in circle-jerk commitments stretching into the 2030s, all premised on it remaining the most important player in the industry) still holds a dominant share of overall chatbot usage. But people now spend more time chatting with Gemini than with ChatGPT, and Google is clearly stealing users away from OpenAI.

So does Google’s ascendancy with TPUs and Gemini mean Nvidia – the world’s most valuable company – is also facing a similar “code red”?

UBS adds that in a call with the bank, NVDA highlighted its strong relationship with GCP, noting that Google uses both TPUs and GPUs for Gemini inference workloads. According to UBS, NVDA believes cloud vendors are unlikely to run TPUs in their cloud stacks, given the extensive workload optimization needed to achieve TCO advantages on ASICs.

Furthermore, NVDA does not believe its performance lead over peers has narrowed so far. For its C2026 outlook, NVDA noted that Anthropic’s 1GW of capacity and HUMAIN’s 600k-unit expansion are incremental to its $500B C25-C26 order figure, offering potential upside.

Finally, NVDA’s CPX chip targets advanced coding applications that require vast context windows of 1M+ tokens; NVDA has not officially sized this market, but it has previously suggested that long-context applications account for ~20% of the inference market.

In short, while OpenAI does indeed have reason to panic (with its $1…), so far this turbulence is confined to the company.

More in the full UBS note available to pro subs.
